The SME Leader's Playbook for Practical AI

By Global Leaders Insights Team

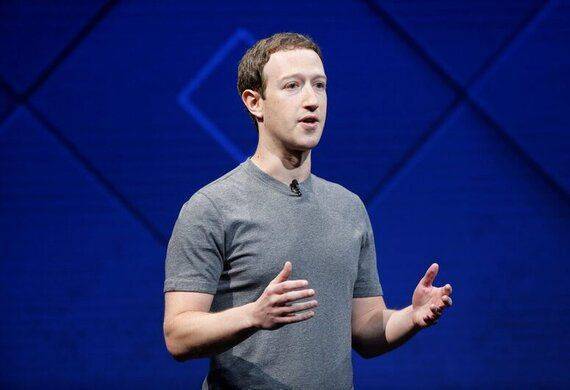

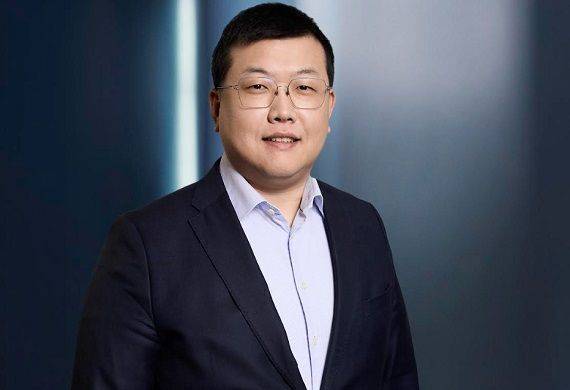

In an exclusive conversation with Global Leaders Insights, Kun Cao, Client Director at Reddal, discusses how AI adoption for SMEs is moving beyond experimentation toward structured, scalable capability. He notes that competitive advantage now comes from disciplined implementation: starting with personal familiarity, moving to secure business-grade tools, and then integrating AI into real workflows and governance. According to Cao, the winners will be the firms that treat AI as core operating infrastructure, not a short-term novelty. Those that learn deliberately, measure impact, and refine continuously will unlock faster execution, fewer errors, and a culture of improvement that competitors cannot quickly replicate.

Artificial intelligence has shifted from novelty to infrastructure. The same assistants that once felt experimental are now embedded in email, documents, browsers, and line-of-business software. For small and midsize enterprises, the strategic question is no longer whether AI belongs in the firm; it is how to adopt it in a way that compounds results instead of producing pilots that stall.

Level 0: personal experimentation to build intuition

Most organizations try to jump straight to platforms and vendors. That is premature. The first job is to give leaders and managers a felt sense of what AI can and cannot do. Treat the tools the way you once treated spreadsheets: use them personally, not by proxy. Begin with low-stakes tasks such as rewriting emails, summarizing public articles, or outlining a meeting agenda, and practice three habits that will carry through the journey. Ask clearly for what you want, including audience, tone, and constraints. Check with discipline, verifying facts and reading for logic. Iterate rather than accepting the first draft, because quality emerges from the back-and-forth. When a critical mass of managers has used AI for real work, can name where it helps and where it fails, and understands basic boundaries on confidential data, the organization is ready for structured use.

Level 1: use off-the-shelf tools in daily work

The quickest gains come from applying general-purpose assistants and AI features already present in your software. This is not about custom systems. It is about making everyday work faster, clearer, and more consistent. Focus on recurring, text-heavy tasks in go-to-market, customer support, operations, finance, and HR.

In sales and marketing, use AI to draft and localize copy, assemble proposals from a curated library of approved paragraphs, and prepare concise prospect briefs; measure the outcome in turnaround time and win rate.

In support, let AI draft replies to common inquiries and summarize long threads while agents remain accountable for tone and truth; watch first-response time and the share of tickets resolved without escalation.

In operations, convert workshop notes into standard operating procedures, extract checklists for handoffs, and publish meeting actions that name owners and dates; track time from decision to documented practice and rework incidents linked to unclear steps.

In finance, turn numbers into narrative for management reviews and scenario memos, while keeping verification non-negotiable.

In HR, use AI to accelerate job descriptions, interview kits, policy drafts, and staff FAQs, then judge success by time-to-post, early candidate quality, and fewer requests for policy clarification.

Adoption sticks when AI sits where work already happens. Keep the data boundary conservative, keep humans accountable for outputs, and measure impact with a few hard numbers rather than anecdotes. When those habits take root, AI shifts from curiosity to capability and the firm is ready to professionalize.

Level 2: upgrade to secure, higher-performance usage

Unmanaged enthusiasm creates hidden risks. Drafts with customer details slip into consumer tools, quality drifts as some teams accept outputs at face value, and tool sprawl overwhelms training and support. Professionalization starts by standardizing on a compact, business-grade stack: one productivity suite with AI, one general assistant for open-ended work, and a small number of domain tools where value is already proven. Insist on administrative control, including single sign-on, multi-factor authentication, data-use controls that keep prompts and outputs out of model training, configurable retention, audit logs, and explicit data residency. Surround the core with a clearly labeled perimeter for safe experimentation so curiosity survives without compromising control.

Policy becomes practical when it is short, specific, and visible inside the tools people use. Draw a bright line around data that is prohibited in general-purpose systems, state that the human remains responsible for accuracy and tone, set verification norms for claims and figures, define when and how to disclose AI assistance to clients, and restrict plugins and connectors to an allowlist.

Technology and policy do not change behavior by themselves, so assign light-touch roles. An executive sponsor clears roadblocks; functional owners curate prompts and templates and own outcomes; respected practitioners serve as champions who run clinics and surface edge cases; and a security lead approves tools and manages exceptions. Training shifts from generic introductions to hands-on sessions that use the firm’s own documents and workflows, followed by quick clinics to fix what breaks. Avoid the predictable failure modes (policy theater, over-restriction that fuels shadow IT, prompt sprawl, tool bloat, and automation without ownership) by keeping the stack compact, embedding guidance where work happens, and assigning clear accountability.

You will know Level 2 is in place when most target users complete named tasks with approved tools, guardrails are followed with only minor exceptions, a living library of prompts and templates is actually used, and leaders can point to several workflows with measurable performance gains. At that point, the constraint is no longer hygiene but proximity to your own data and processes.

Level 3: build internal capability

Level 3 is the pivot from “using AI” to “being good at AI.” Capability begins when AI gets closer to the information and workflows that define your economics. The data job is not a grand transformation; it is making key facts about customers, products, orders, inventory, and projects clean enough and accessible enough to power a few priority use cases. Pick the systems of record that matter, agree on “good enough” definitions for critical fields, consolidate scattered spreadsheets into shared structures, and assign ownership for upkeep. If you cannot answer a basic question about a customer or a transaction without hunting through mailboxes and private folders, fix that first.

Process clarity is the multiplier. AI amplifies whatever it touches; if the workflow is tacit, you will scale confusion. Map the journeys that matter, such as lead-to-revenue, order-to-cash, and ticket-to-resolution, and write down how they run. Use AI as a scribe to turn notes into draft procedures and translations, but keep managers accountable for the truth. Then choose use cases where text, judgment, and data meet in high-frequency work. A proposal assistant that assembles drafts from your own library, a project companion that turns notes and time entries into weekly reports, or a service helper that drafts responses based on your prior resolutions are all credible starting points. Tie each to an existing metric such as cycle time, win rate, first-pass yield, or cash conversion, and stop if the metric does not move.

Form a small, accountable delivery team. A business owner with authority makes trade-offs. A practitioner with technical fluency stitches tools together and builds simple automations. A frontline voice keeps the work honest. Give them time, a visible backlog, and acceptance criteria. Run short pilots on narrow slices of the workflow, compare performance to the old way with numbers and qualitative feedback, and schedule iteration because the first version will be clumsy. If a pilot clears the bar, deployment is change management: embed steps in the official process, update the procedures, train the next cohort on the use case rather than generic features, and maintain a single place where prompts, templates, and examples live. Resist the urge to scale everywhere at once. Let a handful of well-run deployments create belief and reusable assets, such as a document library, a shared glossary, and a standard connector. As capability accumulates, the culture changes: teams experience AI as something that makes work clearer and results stronger, leaders ask tighter questions about decisions, errors, and customer simplicity, and learning compounds.

Level 3 ends when the organization can repeatedly identify a high-value task, connect it to the right data and process, build a small tool that helps the people who do the work, and deploy it safely into daily operations. At that point you have a capability, not a project.

Level 4: scale, govern and continuously improve

Reaching Level 4 is less about adding another tool and more about changing how the business learns. Treat successful use cases like small internal products with owners, version histories, and service levels. Build a simple roadmap that aligns a handful of initiatives to the outcomes that matter over the next twelve to twenty-four months, and fund fewer, better efforts with explicit metrics for graduation, iteration, or exit. Keep governance light but firm. Product owners can change prompts, templates, and workflows within boundaries, while anything that touches customer data, pricing, or regulated content gets a quick review from the data and security lead. When models update or outputs drift from the firm’s tone, document the incident, fix it, and fold the lesson back into training and policy. Over time, this creates an institutional memory that prevents repeats and sustains confidence.

Continuous improvement depends on feedback moving faster than habit. Give everyday users a low-friction way to flag what works and what breaks, and ship small fixes quickly so teams see the system is listening. As the portfolio grows, invest in basic data stewardship: stable interfaces, shared glossaries, consistent field names, validation at entry, and sensible archival rules. The point is fewer surprises when workflows cross functions or when a new use case draws on the same sources. Advantages take root when AI fluency becomes part of how the firm hires, promotes, and develops, when communities of practice share patterns in language non-experts can use, and when incentives reward teams that consistently improve a workflow.

Risk management matures in parallel. Test model and plugin updates against a small suite of critical prompts before production, define graceful degradation paths for high-impact workflows, and take a consistent stance on disclosure in external communications so client-facing teams can explain the role of AI without undermining confidence. None of this needs to be heavy; all of it should be intentional.

The payoff appears quietly before it becomes obvious. Customers notice that proposals arrive sooner and feel more tailored, that issues resolve with fewer hops, and that your teams are consistent across languages and channels. Employees notice that documentation matches how the work is actually done, that suggestions are acted on, and that learning the next tool feels like an extension of what they already know. Owners notice that headline metrics move in the right direction and that gains persist when a manager leaves or a vendor retires a feature. In that state, AI is no longer a program to manage. It is infrastructure for learning and a competitive stance rivals cannot copy quickly.

For small or midsize enterprises, the choice is not between adopting AI and ignoring it; it is between learning deliberately now or competing later against firms that did. The path is neither mysterious nor grandiose. Start by building intuition at the individual level, professionalize usage with a compact and secure stack, connect the first few workflows to your own data and processes, and then scale what works with light, visible governance. Do that with discipline and the economics of the business begin to change: cycle times shrink, error rates fall, and managers spend more time on judgment and less on drudgery. Most importantly, the company develops a habit of improvement that rivals cannot copy quickly, because it lives in how people think and work, not just in the tools they use.
